When you run campaigns or other events that may result in traffic peaks that are much higher than your site usually gets, you can prepare by estimating the impact the traffic will have on the hosting. There are a lot of variables that may impact the performance of your website, and subsequently the server load. In this article, we outline what you should worry about, what not to worry about, and how to make fairly precise estimates of the impact of your campaign on server resource usage.

There are always trade-offs between performance and scalability. When you rig your website for high traffic, you have to prioritize scalability over end-user performance, because you often cannot get both. Hosting consists of many services that work together to deliver web pages to the end-user, and every hosting setup has its bottlenecks. When you prepare a site for scaling, the focus is on removing the bottlenecks in the order in which they are likely to appear.

At Servebolt we use page views per second as the key metric when we estimate web traffic. With that metric estimated, you can use this article to check that your expected traffic matches the capacity of your web server, and to get insight into how many requests a server can handle.

The magic formula we use for our estimates captures the essence of the calculation. It gives an approximate number for how many users you can have on your site simultaneously, as reported in Google Analytics:

(Number of CPU cores / Average page response time in seconds) * 60 * User click interval in minutes = Maximum simultaneous users

Front-end performance, as you measure it with Pingdom Tools or PageSpeed Insights, does not matter much when it comes to scaling. The bottleneck is not how your front-end works but how much traffic and load your web server can handle.

How to estimate your web server's capacity

We have a few simple methods that usually produce quite precise estimates of how much traffic your hosting setup can deal with. The bottlenecks that you first run into when scaling traffic are usually 1) PHP and 2) database performance. There are many techniques you can use to try to reduce the load on these, but let’s do the math first.

How to check the number of CPU cores on the web server

A server has a certain number of CPU cores available. Let’s use a dual 8-core CPU E5 box as an example.

    bash-4.2$ grep processor /proc/cpuinfo | wc -l
    32

In this example, the server has 32 cores available. These are logical cores: 16 physical cores with two hardware threads each. The number of CPU cores sets the limit for how much PHP you can run before the server reaches its max capacity. The CPU frequency (GHz) affects the overall performance of your website but is not part of the max capacity calculation.

You can also use a command like lscpu:

    bash-4.2$ lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                32
    On-line CPU(s) list:   0-31
    Thread(s) per core:    2
    Core(s) per socket:    8
    Socket(s):             2
    NUMA node(s):          2
    Vendor ID:             GenuineIntel
    CPU family:            6
    Model:                 79
    Model name:            Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz
    Stepping:              1
    CPU MHz:               1230.084
    CPU max MHz:           3000.0000
    CPU min MHz:           1200.0000
    BogoMIPS:              4201.95
    Virtualization:        VT-x
    L1d cache:             32K
    L1i cache:             32K
    L2 cache:              256K
    L3 cache:              20480K
    NUMA node0 CPU(s):     0-7,16-23
    NUMA node1 CPU(s):     8-15,24-31
    Flags:                 fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb invpcid_single intel_pt kaiser tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdseed adx smap xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts

This is somewhat harder to read for the untrained eye, but the CPU(s) line shows 32 directly, and Socket(s) * Core(s) per socket * Thread(s) per core = 2 * 8 * 2 also produces 32.
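The same multiplication can be sketched in Python. This is only an illustration, assuming you have pasted the relevant lines of lscpu output into a string; the helper name `logical_cpus_from_lscpu` is hypothetical and not part of any tool.

```python
def logical_cpus_from_lscpu(lscpu_text: str) -> int:
    """Return sockets * cores per socket * threads per core from lscpu-style text."""
    fields = {}
    for line in lscpu_text.splitlines():
        # Each lscpu line looks like "Key:   value"; skip anything else.
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        fields[key.strip()] = value.strip()

    sockets = int(fields["Socket(s)"])
    cores_per_socket = int(fields["Core(s) per socket"])
    threads_per_core = int(fields["Thread(s) per core"])
    return sockets * cores_per_socket * threads_per_core


# Values copied from the lscpu output of the example server.
SAMPLE = """\
CPU(s):                32
Thread(s) per core:    2
Core(s) per socket:    8
Socket(s):             2
"""

print(logical_cpus_from_lscpu(SAMPLE))  # 32
```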

How much CPU time does an average PHP request to your site consume?

The other metric we need for making the estimate is the amount of time the CPU uses to produce “the average” webpage on your site. The simplest way to do this is to check a few different pages (use the pages you expect your visitors to hit) and calculate an average.

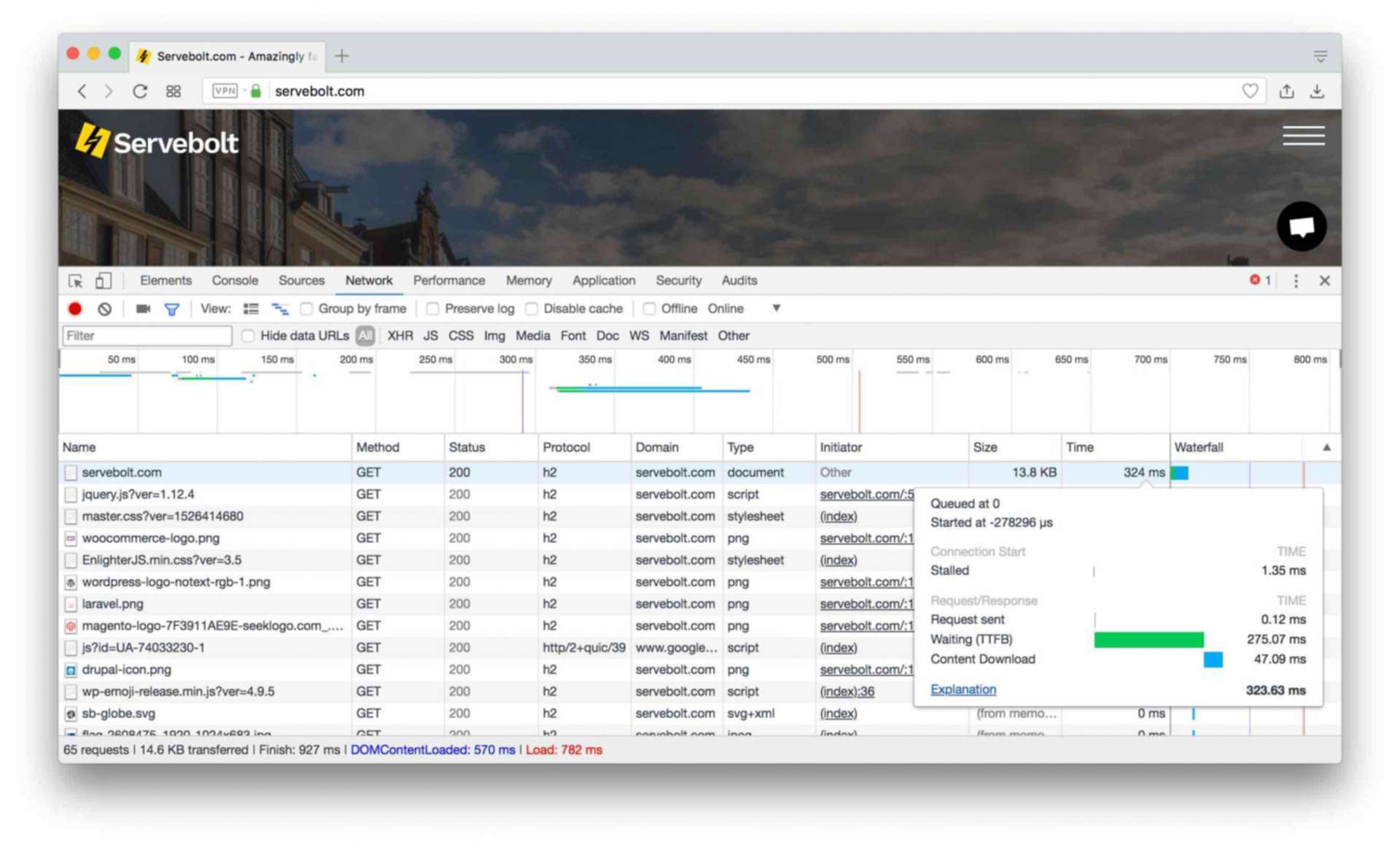

The number we use for the estimate is the sum of Time To First Byte and the Content Download time. In the example above, we can read that the time spent producing the front page is 323 ms, or roughly 0.3 seconds.

For eCommerce stores, it is important to check the performance of the front page, category pages, and product pages, add to cart and checkout, and make a realistic estimate. If your site uses full page caching, you should add a cache buster (just add some parameters to the URL, for instance https://servebolt.com/?cache=busted). This will make the request hit PHP instead of your full page cache.
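If you prefer to script the measurement rather than read it from a browser tool, something like the following could work. It is a rough sketch using only the Python standard library, and the function names are made up for this example. Note the assumption: timing the whole request from the client also includes DNS lookup, connection setup, and network latency, so the result will be somewhat higher (more pessimistic) than Time To First Byte plus Content Download alone.

```python
import time
import urllib.request
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_cache_buster(url: str, token: str = "busted") -> str:
    """Append a cache=<token> query parameter, keeping any existing parameters."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append(("cache", token))
    return urlunsplit(parts._replace(query=urlencode(query)))


def time_request(url: str) -> float:
    """Seconds from sending the request until the whole body is downloaded."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=30) as response:
        response.read()
    return time.perf_counter() - start


def average_seconds(timings: list[float]) -> float:
    """Plain arithmetic mean of the measured request times."""
    return sum(timings) / len(timings)
```

To use it, build a list of the pages you expect visitors to hit and call `average_seconds([time_request(add_cache_buster(u)) for u in urls])`. Run it a few times, since single measurements can vary a lot.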

What is the relation between CPU cores and the time of PHP requests?

When a visitor hits your web page, the server is busy producing that page until the visitor has received it. In the example above, a CPU core is busy for 323 milliseconds while producing the page. With 1 CPU core, the server could therefore deliver at most 1 / 0.323, or about 3, pages per second.

The formula for calculating the max capacity of your web server

Number of CPU cores / Average time for a page request (in seconds) = Max number of Page Requests per second

The server’s capacity is 32 CPU cores, so when every request to the website on average uses 0.323 seconds of CPU time, we might expect it to be able to deal with approximately 32 cores / 0.323 seconds CPU time = 99 requests per second.
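As a quick check of this arithmetic, here is a minimal Python sketch of the capacity formula. The function name is hypothetical, and rounding down is a choice made here to keep the estimate on the pessimistic side.

```python
import math


def max_requests_per_second(cpu_cores: int, avg_request_seconds: float) -> int:
    """CPU cores divided by average request time, rounded down."""
    if avg_request_seconds <= 0:
        raise ValueError("avg_request_seconds must be positive")
    return math.floor(cpu_cores / avg_request_seconds)


print(max_requests_per_second(1, 0.323))   # 3  (a single core)
print(max_requests_per_second(32, 0.323))  # 99 (the 32-core example server)
```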

Why is the number of page requests per second an important metric for Scalability?

The scalability of your website usually boils down to when your server hits the CPU limit. The average page request time captures both the time PHP consumes and the time the database uses for the queries.

How many users can I have on my website simultaneously?

The question is, how do page views per second relate to Google Analytics? To figure out how many simultaneous users you can have on your site, you need to check your Google Analytics and calculate how often your users click on average.

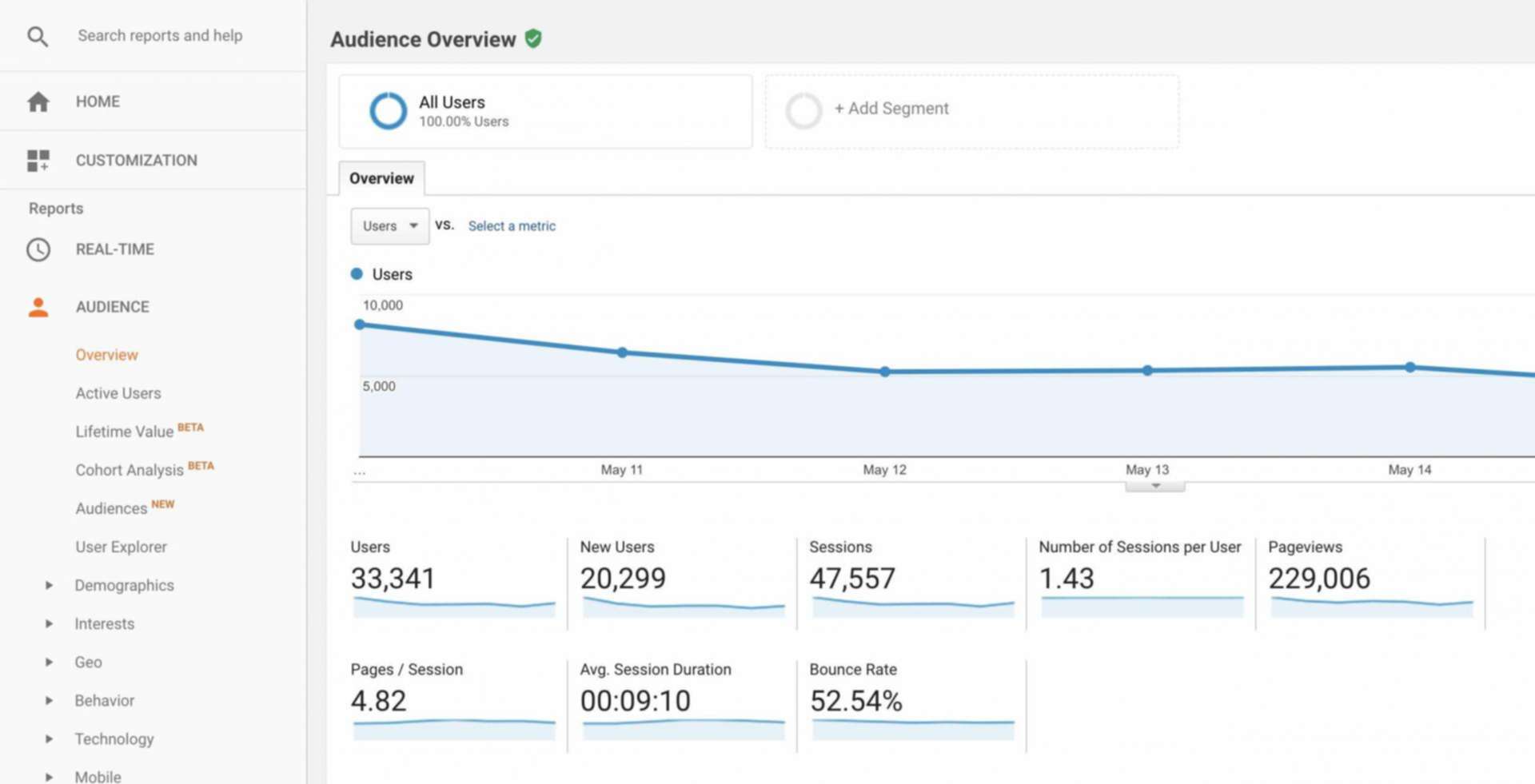

If you navigate to Audience > Overview, you will find Average Session Duration and Pages per Session.

The session duration in this example is 9 minutes and 10 seconds, which is 9 * 60 + 10 = 550 seconds. Every user, on average, views 4.82 pages per session. Divide the Average Session Duration by Pages per Session (550 / 4.82), and you get about 114 seconds. This means that the average user on your website clicks approximately once every 2 minutes (every 114 seconds). This number can vary a lot, but for eCommerce, the rule of thumb is once per minute.
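The click interval calculation is simple enough to script. The sketch below assumes you have copied the two Google Analytics figures by hand; the function name is made up for this example.

```python
def click_interval_seconds(avg_session_seconds: float, pages_per_session: float) -> float:
    """Average number of seconds between page views for one user."""
    if pages_per_session <= 0:
        raise ValueError("pages_per_session must be positive")
    return avg_session_seconds / pages_per_session


session_seconds = 9 * 60 + 10  # 9 minutes 10 seconds = 550 seconds
interval = click_interval_seconds(session_seconds, 4.82)
print(round(interval))  # 114
```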

When we know that a user in Google Analytics clicks about once every two minutes, and we know that the server’s capacity is 99 requests per second, we can calculate a fairly precise estimate:

Max number of requests per second * 60 * Click interval of users in minutes = Maximum Number of Simultaneous Users

99 requests per second * 60 * 2 minutes click interval = 11,880 Max Simultaneous Users in Google Analytics
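The last step can be sketched the same way. The function name is hypothetical; it takes the click interval in minutes, and rounds down to stay pessimistic.

```python
import math


def max_simultaneous_users(requests_per_second: float, click_interval_minutes: float) -> int:
    """Requests per second * 60 * click interval in minutes, rounded down."""
    return math.floor(requests_per_second * 60 * click_interval_minutes)


print(max_simultaneous_users(99, 2))  # 11880
```

Rounding the 114-second interval up to 2 minutes is slightly optimistic. With the unrounded interval, the same arithmetic gives 99 * 114 = 11,286 users, which is the safer number to plan around.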

You can raise a lot of questions about this method, but in our experience it gives fairly precise estimates. You should, however, always round numbers pessimistically to stay on the safe side!

How many users can I have on my VPS?

If you run your site on a VPS with just one or a few CPU cores, the capacity limit may be reached much earlier. For example:

Average PHP request time: 650ms

CPU cores: 2

Click Frequency: 45 seconds (normal for eCommerce)

2 cores / 0.65 seconds ≈ 3 page views per second. 3 * 60 * 0.75 minutes (45 seconds) = 135 Max simultaneous users.
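To run the whole estimate for your own numbers in one go, the steps can be combined as below. This is a sketch under the same assumptions as the article's formula, with a made-up function name. It takes the request time in milliseconds and the click interval in seconds, as in the VPS example, and multiplying by the interval in seconds is the same as multiplying by 60 and the interval in minutes.

```python
import math


def estimate_simultaneous_users(cpu_cores: int, avg_request_ms: float,
                                click_interval_seconds: float) -> int:
    """End-to-end estimate, rounding down at each step to stay pessimistic."""
    # Step 1: page views per second the CPU cores can sustain.
    requests_per_second = math.floor(cpu_cores / (avg_request_ms / 1000))
    # Step 2: each user only produces one request per click interval.
    return math.floor(requests_per_second * click_interval_seconds)


print(estimate_simultaneous_users(2, 650, 45))    # 135   (the VPS example)
print(estimate_simultaneous_users(32, 323, 120))  # 11880 (the 32-core example)
```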

How to improve the scalability of your website

There are basically two things you can do to improve the scalability of your website. Either your website must consume fewer resources per visitor, or you have to increase the amount of server resources.
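Both options can be read straight out of the formula by turning it around: for a target number of simultaneous users, how many CPU cores are needed? The sketch below is an illustration derived from the formula in this article, not a sizing tool, and the function name is hypothetical.

```python
import math


def cores_needed(target_users: int, avg_request_seconds: float,
                 click_interval_minutes: float) -> int:
    """Invert the capacity formula and round up to whole cores."""
    required_requests_per_second = target_users / (60 * click_interval_minutes)
    return math.ceil(required_requests_per_second * avg_request_seconds)


# Same traffic, two options: keep the 323 ms pages, or halve the request time.
print(cores_needed(11880, 0.323, 2))   # 32
print(cores_needed(11880, 0.1615, 2))  # 16
```

Halving the average request time halves the number of cores needed for the same traffic, which is why making the application faster usually pays off before adding hardware.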

For eCommerce hosting, server resources are crucial because most requests are dynamic and will run PHP. HTML caching (also called full page caching) will help you scale a little, but with many sessions and carts, the server resources will usually be spent quickly anyway. Therefore, it is always a good idea to make your web application faster and less resource-hungry. One very efficient way to apply multiple scaling techniques in one go is to use Accelerated Domains, which will help you stay on a single server setup for longer.

The moment your site exceeds the limits of a single server setup, your costs will increase, and not only for your hosting plan or server. Because the setup is more complex, your costs for consultancy, maintenance, and development will increase as well.

Our standard hosting plans give your site access to 24, 32, or even more CPU cores. A healthy eCommerce site (fast and without bugs) will normally scale to many hundreds or even a few thousand users without any problems or worries! Create a trial account and test us out!

If you are looking to solve both performance and scalability with a single solution, then explore our newly launched service Accelerated Domains. This unique service allows your website to scale without any constraints and compromises on performance. It improves the cache hit ratio by 60%, leaving your server available to serve more visitors, and its smart location-aware caching network is capable of serving your customers from 180+ nodes globally.

Happy scaling!