Since we launched our new WordPress-based Servebolt website last year, we have been continuously experimenting, extending, adding, and removing features. Many of these have been JavaScript-based and have added quite a bit of loading time to our website. With GDPR right around the corner, we decided to consolidate and use fewer services to track site visitors, and to improve the speed of our websites in one go.

We couldn’t agree more with this tweet. The logic is obvious: better control of information and fewer scripts are good things. So we decided to practice what we preach and remove dead weight and experiments from our own websites.

Increasing Performance for the International Audience

Servebolt.no launched in July 2017, and we extended it with Servebolt.com in November and Servebolt.se at the beginning of 2018. Last year, 15% of our website traffic was international; the rest came from Norway. This March, international traffic accounted for more than 66%, because the new sites have been attracting more traffic and new customers every week.

In the last few weeks, our total traffic has nearly doubled due to a sharp increase in both new customers and Servebolt blog readers. Our popular articles have an international audience, and until this weekend, they were all served directly from our data center in Oslo, Norway. We had not been using a CDN for our own sites for a simple reason: until recently, it would not have given our visitors any performance benefit, since most of them were as close or closer to our server than to the CDN nodes.

With traffic patterns shifting from Scandinavia and Northern Europe to a global audience, we had good reason to improve performance on our sites for visitors further away. We pinpointed a few things that would be quite easy to fix:

- DNS lookups for servebolt.com had varying response times. Our DNS provider had three servers in different locations and no smart routing of DNS traffic, which resulted in randomly varying lookup times.

- We served content from several of our own domains within a single page view, which added extra DNS lookups for every visitor.

- Long-distance access was underperforming, and bound to be slower than necessary, because we did not use a CDN.
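A quick way to see whether lookup times really vary is to time repeated resolutions yourself. The sketch below is a minimal Python illustration, not part of our setup; the helper name `time_dns_lookups` is hypothetical. It uses only the standard library's `socket.getaddrinfo`, which goes through the operating system's resolver, so repeat lookups are often answered from a local cache.

```python
import socket
import time


def time_dns_lookups(hostname: str, attempts: int = 5, port: int = 443) -> list:
    """Resolve `hostname` repeatedly and return each lookup time in milliseconds.

    This uses the operating system's resolver, so after the first lookup the
    answer may come from a local cache. To observe the variance of the
    authoritative servers, flush the cache between runs.
    """
    timings_ms = []
    for _ in range(attempts):
        start = time.perf_counter()
        socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
        timings_ms.append((time.perf_counter() - start) * 1000)
    return timings_ms
```

Calling `time_dns_lookups("servebolt.com")` from machines in different regions, with a cold cache, gives a rough picture of how consistent a DNS provider's answers are.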

We decided that Easter was a good time to switch DNS providers and add the Cloudflare CDN with Railgun.

Collecting Performance Data from Our Site Visitors

We have always had some basic monitoring of our own sites but have not been tracking any RUM (Real User Monitoring) data. We use Google Analytics to track website visits, but by default Google Analytics collects speed data from only 1% of visitors. That makes the speed reports noisy and hard to use: there is too little data once you filter by location, browser, or similar dimensions.

We considered adding Pingdom RUM tracking but decided it was better not to add more scripts to our website. After all, the whole process was triggered by a desire to reduce our use of external scripts and increase overall performance. We therefore decided to use the features of a script we already had in place: Google Analytics.

To make Google Analytics increase the sample rate of its speed metrics, you have to set the site speed sample rate (“SetSiteSpeedSampleRate”) to a number between 1 and 100. The number is the percentage of visitors whose page timings are sampled. We set this value to 100, so every visitor tracked by Google Analytics provides a sample with performance metrics.
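To see why the default rate is too low for filtered reports, it helps to do the arithmetic. In analytics.js the setting is the `siteSpeedSampleRate` field passed to `ga('create', ...)`. The Python sketch below treats the rate as a simple fraction of page views, which is an approximation since Google Analytics samples per visitor; the segment names and page-view counts are hypothetical.

```python
def expected_speed_samples(pageviews: int, sample_rate_pct: float) -> float:
    """Expected number of page-timing samples for a segment at a given rate."""
    if not 1 <= sample_rate_pct <= 100:
        raise ValueError("sample rate must be between 1 and 100 (percent)")
    return pageviews * sample_rate_pct / 100


# Hypothetical monthly page views per report segment, for illustration only.
segments = {"Norway": 20_000, "US East": 3_000, "US West, Safari": 400}

for name, views in segments.items():
    default = expected_speed_samples(views, 1)
    full = expected_speed_samples(views, 100)
    print(f"{name:16} default (1%): {default:7.0f}   rate 100: {full:7.0f}")
```

A narrow segment such as the hypothetical "US West, Safari" goes from 400 possible samples to about 4 at the default rate, which is far too few to say anything about its speed.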

In addition, we monitor some of our sites with Pingdom. Pingdom has a “Page Speed” report that runs every half hour and can be run from different regions around the world. Our primary aim was to improve performance for US visitors, so we added page speed reports that run from both the West and East Coast of the US.

Optimizing our Own Websites for Performance

The most common website performance problem is a high Time To First Byte (TTFB). A high TTFB is usually caused by slow server performance, but it can also be caused by high latency on the network connection between client and server. All our pages typically respond in less than 250 ms uncached, but response time grows with physical distance, so clients located far from our hosting location see a higher TTFB.
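Why distance matters even when the server is fast can be shown with a back-of-the-envelope model. This Python sketch assumes light in optical fiber covers roughly 200 km per millisecond, that packets follow the great-circle path (real routes are longer), and that a fresh HTTPS connection needs one roundtrip for TCP, two for a TLS 1.2 handshake, and one for the request itself. DNS is left out, and the function names are illustrative.

```python
import math

FIBER_KM_PER_MS = 200.0  # assumption: light in fiber covers roughly 200 km per ms
EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points on Earth, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time: there and back at fiber speed, no detours."""
    return 2 * distance_km / FIBER_KM_PER_MS


def min_ttfb_ms(distance_km, server_ms, tls_rtts=2):
    """Rough TTFB floor on a new connection: TCP (1) + TLS + request (1) roundtrips."""
    roundtrips = 1 + tls_rtts + 1
    return roundtrips * min_rtt_ms(distance_km) + server_ms
```

From Oslo (about 59.9° N, 10.8° E) to New York (about 40.7° N, 74.0° W) the great-circle distance is roughly 5,900 km, so every roundtrip costs at least about 60 ms. Four of them add roughly a quarter of a second on top of a 250 ms server time, before any real-world routing overhead.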

CDNs Add Latency to Your Dynamic Content

When you put a CDN in front of your website, it adds latency to the TTFB of your dynamic content, because accessing the origin server directly is faster than routing traffic through a CDN. The CDN improves performance for cacheable elements, but a dynamic page still has to be fetched from the origin server.

However, Cloudflare’s Railgun technology can reduce the added latency quite effectively. It cannot bring the TTFB below the base latency between the visitor and your server, but it minimizes the latency that the CDN adds. For long-distance traffic, Railgun can save several roundtrips to the origin server, improving speed for subsequent requests. We saw latency reductions of more than a second when measuring from the most remote places on earth.
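Both effects above, a CDN adding latency to dynamic pages and Railgun saving roundtrips, can be illustrated by counting roundtrips. In this Python sketch, with hypothetical latencies throughout, the visitor always opens a fresh connection to a nearby CDN node. The difference is whether that node must also open a fresh connection to the origin over the long link, or can reuse one that is kept open. Cloudflare has described Railgun as maintaining a persistent connection between its network and the origin. Railgun also compresses the changes between page versions, which this model ignores.

```python
from dataclasses import dataclass


@dataclass
class Latencies:
    visitor_edge_rtt_ms: float    # visitor <-> nearest CDN node
    edge_origin_rtt_ms: float     # CDN node <-> origin server
    visitor_origin_rtt_ms: float  # visitor <-> origin when no CDN is used
    server_ms: float              # time the origin needs to generate the page


def fresh_connection_rtts(tls_rtts: int = 2) -> int:
    """Roundtrips to first byte on a new connection: TCP (1) + TLS + the request (1)."""
    return 1 + tls_rtts + 1


def ttfb_direct(p: Latencies, tls_rtts: int = 2) -> float:
    return fresh_connection_rtts(tls_rtts) * p.visitor_origin_rtt_ms + p.server_ms


def ttfb_via_cdn(p: Latencies, warm_origin_connection: bool, tls_rtts: int = 2) -> float:
    # The visitor sets up a fresh connection to the nearby edge and sends the request.
    visitor_leg = fresh_connection_rtts(tls_rtts) * p.visitor_edge_rtt_ms
    # A cold edge-to-origin connection repeats every handshake over the long link;
    # a connection that is kept open only pays for the request itself.
    origin_rtts = 1 if warm_origin_connection else fresh_connection_rtts(tls_rtts)
    return visitor_leg + origin_rtts * p.edge_origin_rtt_ms + p.server_ms


example = Latencies(visitor_edge_rtt_ms=10, edge_origin_rtt_ms=120,
                    visitor_origin_rtt_ms=125, server_ms=250)
for label, value in [("direct", ttfb_direct(example)),
                     ("CDN, cold origin connection", ttfb_via_cdn(example, False)),
                     ("CDN, warm origin connection", ttfb_via_cdn(example, True))]:
    print(f"{label:30} {value:6.0f} ms")
```

With these made-up numbers the model gives 750 ms direct, 770 ms through a CDN that reconnects to the origin for every request, and 410 ms when the origin connection is kept warm.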

Get rid of experiments and convenient solutions

JavaScript is often the single largest source of latency on websites. Every online service provider wants to place a script on your website: Google, Facebook, LinkedIn, AdWords, Tag Manager, you name it. We had our own mix: services we had tested and used for a while, and services we had added out of pure and simple convenience.

There are usually better and more targeted ways to achieve the goals these scripts serve. We decided to kill many of them, consolidate everything into a few services, and load the remaining scripts only where they are needed instead of on every page.

Our list of tools before we started:

- Google Tag Manager – a tool to easily add scripts

- Google Analytics – track website visitors

- AdWords conversion tracking – track AdWords conversions

- Mautic – track marketing data and provide forms

- Tapfiliate – affiliate network conversion tracking

- Crisp – chat widget

- HubSpot – track marketing data

- LinkedIn ad tracking pixel

- Facebook tracking pixel

BLUSH! Over just the last six months, we had added all these scripts to our sites, and they were slowing things down. We love prototyping and testing, but experiments need to be cleaned up every once in a while. We always tell our clients to add scripts to their websites with care, but this time we obviously had not followed our own advice.

Several of these were non-essential, so we got rid of most of them and consolidated the functionality into fewer scripts and alternative implementations. We had to redo the forms on our website, moving them from Mautic to WordPress. Google Tag Manager and the LinkedIn pixel were removed, and Facebook tracking is now limited to just a few pages. A great win for GDPR, better privacy for our website visitors, and better control of user data.

Do as Servebolt preaches – fix all bugs

It is amazing how many people believe computers have infinite computing power. This is far from true, both for servers and for browsers on modern devices. Servers have not been getting significantly faster in the last 10 years. The devices we now use most (mobile phones) are faster than they used to be, but they are still much slower than a typical laptop.

In our opinion, every programmer or front-end developer who respects their profession should spend more time becoming a better coder. Bugs and overuse of resources are what make the internet slow. Computers are fast, but with today’s insane use of libraries, plugins, and extensions, things can easily get out of hand. We had a big laugh in the office the other day when one of the guys found an application that pulled in 250 MB of external libraries just to say “Hello World.”

PHP Bugs

We have quite good routines for PHP development and continuous performance testing of our own sites, so our website error logs are mostly empty. For most website developers, the error log would be a great place to start. The principle is that any bug adds latency, and the performance impact of PHP notices, warnings, and errors is widely underestimated. We can guarantee faster performance if you fix all the bugs in your error log, and as an added bonus, your website will work better for your visitors.
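To find out where to start, it helps to group the log by severity and by repeated message. This is a minimal Python sketch, assuming the common PHP error log line format shown in the comment; the function name is hypothetical and your log's location depends on your PHP and WordPress configuration.

```python
import re
from collections import Counter

# Matches the level and message in lines such as:
# [05-Apr-2018 10:15:32 UTC] PHP Warning:  Undefined index: foo in /path/file.php on line 12
LEVEL_RE = re.compile(r"PHP ([A-Za-z]+(?: [A-Za-z]+)*?):\s+(.*)")


def summarize_php_log(lines):
    """Count PHP log entries per level and per distinct (level, message) pair."""
    by_level = Counter()
    by_message = Counter()
    for line in lines:
        m = LEVEL_RE.search(line)
        if m is None:
            continue  # stack-trace frames and unrelated lines
        level, message = m.group(1), m.group(2).strip()
        if level == "Stack trace":
            continue  # header of a stack trace, not an error of its own
        by_level[level] += 1
        by_message[(level, message)] += 1
    return by_level, by_message
```

Sorting `by_message.most_common()` shows which notices and warnings fire on every request; those are usually the cheapest wins.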

HTML, CSS and JS Bugs

Fixing front-end bugs, such as invalid HTML, may sound odd to some people. Many themes come with bugs out of the box, and people tend to neglect them. The same principle applies to this kind of bug: any error or exception consumes processing power, which slows down the rendering of your site. This is especially true for mobile devices.

Using the W3C Markup Validation Service, we easily identified a bunch of errors on our own pages and got rid of about 46 warnings and errors on our front page alone. After fixing them, the page rendered and displayed noticeably faster and felt snappier.
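The same check can be scripted for many pages. A minimal Python sketch, assuming the W3C Nu Html Checker web service accepts a POSTed document at `https://validator.w3.org/nu/?out=json` and returns a JSON object with a `messages` list, where errors have `"type": "error"` and warnings have `"type": "info"` with `"subType": "warning"`. The User-Agent string is a placeholder, and a public service like this should be used sparingly.

```python
import json
import urllib.request
from collections import Counter

NU_CHECKER = "https://validator.w3.org/nu/?out=json"


def validate_html(html: str) -> dict:
    """POST a document to the Nu Html Checker and return its JSON report."""
    req = urllib.request.Request(
        NU_CHECKER,
        data=html.encode("utf-8"),
        headers={
            "Content-Type": "text/html; charset=utf-8",
            "User-Agent": "html-check-sketch/0.1",  # placeholder client name
        },
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def count_issues(report: dict) -> Counter:
    """Tally errors and warnings in a Nu checker JSON report."""
    counts = Counter()
    for msg in report.get("messages", []):
        if msg.get("type") == "error":
            counts["error"] += 1
        elif msg.get("type") == "info" and msg.get("subType") == "warning":
            counts["warning"] += 1
    return counts
```

Running `count_issues(validate_html(page_source))` before and after a cleanup gives a simple number to track per page.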

We also stripped out a bunch of CSS and JS that was no longer used, and made a few notes about inefficient code we’d like to refactor and improve at some point.

Analyzing the Waterfall

We had already fixed the worst underperformers in the waterfall: the scripts. But we found a few more performance-reducing elements, such as redirect bugs where requests went to http:// instead of https://. These produced no visible errors but added extra roundtrips to the page load. This may seem insignificant, but each of these elements required at least one extra roundtrip because of the redirect. We got rid of 6 of the 8 redirects; the remaining two were caused by Google’s scripts.
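Some of these redirects can be caught before they ever show up in a waterfall by scanning the page's HTML for subresources referenced over plain http://. This Python sketch uses the standard library's `html.parser`; the class and function names are hypothetical. It only sees URLs written directly in the HTML `src` attributes and `<link href>`, not those in CSS `url()` or injected by scripts, so the browser waterfall remains the authoritative view.

```python
from html.parser import HTMLParser


class InsecureRefFinder(HTMLParser):
    """Collect subresource URLs that start with http:// instead of https://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:
                continue
            # Only attributes the browser fetches during page load; plain <a> links are skipped.
            fetched = name == "src" or (tag == "link" and name == "href")
            if fetched and value.strip().lower().startswith("http://"):
                self.insecure.append((tag, value.strip()))


def find_insecure_refs(html):
    finder = InsecureRefFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```

Each hit is a request that, on an HTTPS site that redirects http:// to https://, costs at least one extra roundtrip.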

We also found a couple of files that were served from the wrong language domain (servebolt.no instead of servebolt.com), which added an unnecessary DNS lookup to the page.
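Listing the distinct hostnames a page loads from makes this kind of mistake easy to spot, since each new hostname typically needs its own DNS lookup unless it is already cached. A minimal Python sketch using the standard library's `html.parser` and `urllib.parse`; the names are hypothetical, and like any static scan it misses resources added by scripts.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class HostCollector(HTMLParser):
    """Collect the hostnames a page fetches subresources from."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value and (name == "src" or (tag == "link" and name == "href")):
                # Resolve relative and protocol-relative URLs against the page URL.
                host = urlparse(urljoin(self.page_url, value.strip())).hostname
                if host:
                    self.hosts.add(host)


def subresource_hosts(html, page_url):
    collector = HostCollector(page_url)
    collector.feed(html)
    collector.close()
    # The page's own hostname needs a lookup too, so include it.
    own = urlparse(page_url).hostname
    return collector.hosts | ({own} if own else set())
```

A servebolt.no hostname showing up in the result for a servebolt.com page is exactly the extra lookup described above.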

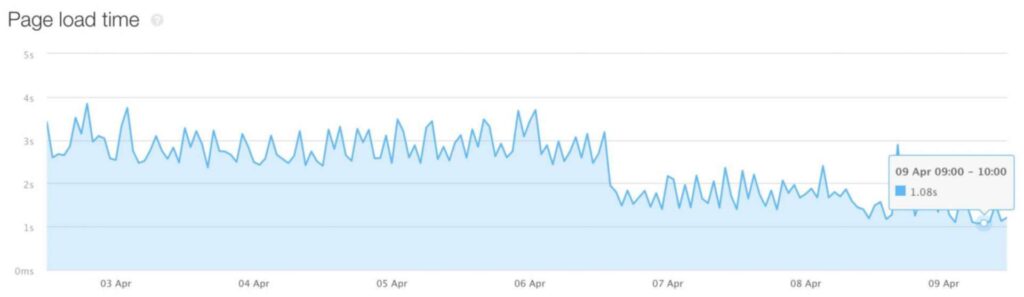

Results – Cutting page load time to 1 second, 25% of where we started

We added tracking just a day before switching to the CDN, but as you can see from the graph, there was a slight drop in page load time between April 1 and 2. On April 6 and 7, we removed the first batch of unused scripts from our site. That nearly halved the page load time, from close to 4 seconds to about 2.

It was great fun to see measurable improvements, so we kept working: we eliminated more scripts and fixed tons of front-end bugs. The following graph is from a test of Servebolt.com run from the Eastern US. Before we started, the total page load time was between 4 and 5 seconds; now it finishes at around 1 second.

Takeaways

Our mission was a simple one: make our public Servebolt websites faster and more privacy-minded for everyone.

- If you have global website traffic, use a CDN with TTFB optimization (like Cloudflare’s Railgun)

- Eliminate as many scripts as possible from your website. You will leak less data about your visitors and improve performance for them.

- Fix bugs – the impact of bugs is much greater than you think

- Implement the functionality you decide to keep in PHP, or load it only on the specific pages that need it.

Have you tried these tips and still have a slow site? Check out our High Performance hosting for WordPress. We guarantee it will speed up your site significantly, and we’ll even give you a free test just to show you how fast your site can be with us.